5. Danish National Championship in AI - All 3 Tasks

Prologue

What a journey it has been to compete in the Danish National Championship in AI (hosted by Ambolt). It was initially meant as a small thing to do with a few friends, but it ended up as a serious competition with a larger team than expected and an actual chance of becoming Danish champions. We, Team Seje Rejer, finished in 3rd place, which we are all very proud of, even though we were convinced that 1st place was within reach. We won 5,000 DKK to share among the team and were invited to the D3A conference to present our solutions alongside the other top-3 teams. It has truly been fun, and we have all learned a ton in the process. Meeting the other teams at D3A was also exciting, as we got to compare solutions and make some new friends!

(That’s me on the left side of the podium!)

The Competition

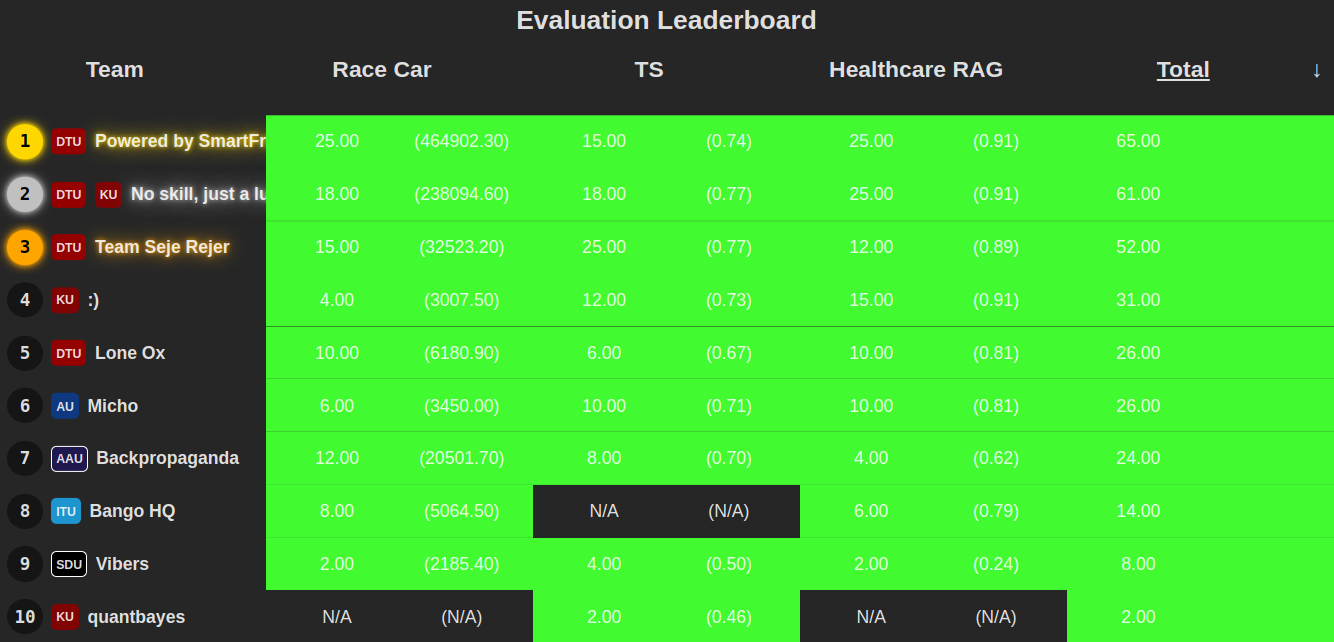

The competition is a week-long hackathon in which each team is evaluated on 3 different challenges. The points a team gets in a challenge depend on its performance relative to the other contestants, following the Formula 1 point system: 1st place gets 25 points, 2nd place gets 18 points, 3rd place gets 15 points, and so on.
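To make the scoring concrete, here is a minimal Python sketch of the per-challenge points. It assumes the standard Formula 1 table continues past 3rd place (12, 10, 8, 6, 4, 2, 1 for places 4 to 10, and nothing after that) and that a team's overall score is the sum over the three challenges; ties are ignored. `points_for_place` and `total_points` are illustrative names.

```python
# Standard Formula 1 points for places 1-10; places beyond 10th score 0.
F1_POINTS = [25, 18, 15, 12, 10, 8, 6, 4, 2, 1]


def points_for_place(place: int) -> int:
    """Return the Formula 1 points awarded for a 1-indexed finishing place."""
    if place < 1:
        raise ValueError("place must be >= 1")
    return F1_POINTS[place - 1] if place <= len(F1_POINTS) else 0


def total_points(places: list[int]) -> int:
    """Sum the points a team earns from its placement in each challenge."""
    return sum(points_for_place(p) for p in places)


# Hypothetical team: 2nd, 1st and 4th place in the three challenges.
print(total_points([2, 1, 4]))  # 18 + 25 + 12 = 55
```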

Each challenge is set up so that we host an endpoint that exposes our model through an API. The evaluator then sends inputs to the API, and there is a time limit on how long it may take to return a valid answer.
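For readers who have not hosted a model this way, a minimal endpoint can be written with only the Python standard library. This is a sketch, not what we deployed: the `/predict` route, the `{"input": ...}` request body, the `{"prediction": ...}` response and the placeholder `predict` function are all hypothetical, since each challenge defined its own request format.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def predict(payload: dict) -> dict:
    """Hypothetical placeholder model: returns the length of the input text."""
    return {"prediction": len(str(payload.get("input", "")))}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Only the (hypothetical) /predict route is served.
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except ValueError:  # covers malformed JSON and undecodable bytes
            self.send_error(400, "invalid JSON")
            return
        if not isinstance(payload, dict):
            self.send_error(400, "expected a JSON object")
            return
        body = json.dumps(predict(payload)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host: str = "0.0.0.0", port: int = 8000) -> ThreadingHTTPServer:
    """Create (but do not start) the HTTP server."""
    return ThreadingHTTPServer((host, port), PredictHandler)
```

Calling `make_server().serve_forever()` starts it; the threading server lets several evaluation requests be handled concurrently.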

Throughout the week, we could repeatedly test our models against the validation leaderboard, which shows everyone how each team is doing so far. Although it is definitely possible to overfit a solution to the validation leaderboard, it should be a good indication of how well you can expect to perform on the final evaluation test, for which we only get 1 attempt!

Challenge 1 - Emergency Healthcare RAG

As if decided by fate, one of the challenges was to design a RAG system, which I just happened to have spent the previous 2 months working on. I had the main responsibility for this task.

The task is as follows:

We have 115 medical topics, and each topic has some associated documents that serve as our reference data. We are then given a statement that was generated from one of those documents, and our job is to identify which topic the statement belongs to and to determine whether the statement itself is true or false.

We were limited to 24GB of VRAM during inference, and the inference time must not exceed 5 seconds.
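The 5-second limit means a slow model call has to be guarded. One way to do that in Python is to run the call in a worker thread and fall back to a cheaper answer if it misses the deadline. This is a minimal sketch: `with_deadline` is a hypothetical helper, and the budget passed in would need to leave room for retrieval and network overhead.

```python
import concurrent.futures as cf
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared pool so that a call which times out does not block the caller.
_POOL = cf.ThreadPoolExecutor(max_workers=4)


def with_deadline(primary: Callable[[], T], fallback: Callable[[], T], budget_s: float) -> T:
    """Return primary() if it finishes within budget_s seconds, else fallback().

    Python threads cannot be killed, so a timed-out call keeps running in the
    background; a real model call should also cap its own work (for example
    with a maximum number of generated tokens).
    """
    future = _POOL.submit(primary)
    try:
        return future.result(timeout=budget_s)
    except cf.TimeoutError:
        future.cancel()  # only has an effect if the call has not started yet
        return fallback()
```

In use, `primary` would wrap the expensive model call and `fallback` a fast default, for example `with_deadline(lambda: slow_model(x), lambda: fast_model(x), 4.0)`, where both model functions are hypothetical stand-ins.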

Our solution

The solution is similar to the previous work I have done with RAG (previous post), so I won’t go into too much detail.

We started by splitting the reference data into chunks of varying lengths and overlaps, and then embedded all of them. The first challenge was to find a good chunking strategy, as well as the most powerful embedding model that fit within the 24GB VRAM constraint. We ended up using a model from HuggingFace: Qwen/Qwen3-Embedding-4B. Then, using a FAISS index, we compared the embedded medical statement with the collection of reference embeddings to find the most similar document, and thereby the likely topic.
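The retrieval step can be sketched in plain Python. Two simplifications are assumptions: chunks are word windows (the window sizes we actually tried are not listed here), and embeddings are plain lists of floats standing in for the output of Qwen3-Embedding-4B. Ranking by cosine similarity over normalized vectors is the same exact search that a FAISS `IndexFlatIP` performs on L2-normalized embeddings, just without FAISS's speed.

```python
import math


def chunk_words(text: str, size: int, overlap: int) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once a window would only repeat words already covered by the previous one.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def _normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else v


def best_topic(query: list[float], chunk_vecs: list[list[float]], chunk_topics: list[str]) -> str:
    """Topic of the chunk whose embedding has the highest cosine similarity to the query."""
    q = _normalize(query)
    scores = [sum(a * b for a, b in zip(q, _normalize(c))) for c in chunk_vecs]
    return chunk_topics[max(range(len(scores)), key=scores.__getitem__)]
```

Each chunk keeps a pointer to its topic (`chunk_topics`), so the nearest chunk directly yields the predicted topic.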

Next, we fed the retrieved chunk's text into an LLM with the following prompts to determine, using the reference data, whether the statement was true or false:

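```python
def build_prompts(reference: str, statement: str) -> tuple[str, str]:
    """Return the (system, user) prompts for fact-checking `statement` against `reference`."""
    # Adjacent string literals are joined with no separator, so each piece ends
    # with an explicit newline to keep the sentences apart.
    system_prompt = (
        "You are a helpful fact-checking assistant.\n"
        "You will be given a reference document and a statement.\n"
        "Respond only with 'TRUE' if the statement is supported by the reference, otherwise respond 'FALSE'.\n"
        "Do not guess. You MAY use outside knowledge to determine the reliability of the statement."
    )
    user_prompt = (
        "Reference:\n"
        f"{reference}\n\n"
        "Statement:\n"
        f"{statement}\n\n"
        "Answer:"
    )
    return system_prompt, user_prompt
```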

As the LLM, we used the Qwen/Qwen3-4B model.
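How the prompts reach the model and how the reply is read back is not shown above, so the following is a hypothetical sketch. It builds the chat-style message list that Hugging Face chat templates (`tokenizer.apply_chat_template`) consume, and parses the reply into a boolean. The `</think>` handling assumes Qwen3's thinking mode might be left on, in which case the answer follows the closing tag.

```python
from typing import Optional


def to_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Chat-format message list, as consumed by chat templates such as
    Hugging Face's tokenizer.apply_chat_template."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def parse_verdict(output: str) -> Optional[bool]:
    """Map the model's raw reply to True/False, or None if neither label is found."""
    text = output.upper()
    # If thinking mode is on, the final answer follows the closing </think> tag.
    if "</THINK>" in text:
        text = text.rsplit("</THINK>", 1)[1]
    text = text.strip()
    if text.startswith("TRUE"):
        return True
    if text.startswith("FALSE"):
        return False
    return None
```

What to do with a `None` (for example, defaulting to one label) is a design choice; returning it explicitly keeps malformed replies visible instead of silently counting them as FALSE.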

Others’ solutions

The interesting thing is that all the top teams had somewhat different strategies, yet all managed to score ~93% on the validation leaderboard. I would argue that this is a ceiling set by the uncertainty of the validation dataset rather than by the models. In other words, either we have no way to tell which model is actually best, OR all the models are equally good.
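A quick way to check this argument is the normal-approximation confidence interval for an accuracy p measured on n statements, p ± 1.96 * sqrt(p * (1 - p) / n). The validation-set size is not given here, so n = 200 below is purely an assumption.

```python
import math


def accuracy_interval(accuracy: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation (Wald) confidence interval for an accuracy measured on n items."""
    half_width = z * math.sqrt(accuracy * (1 - accuracy) / n)
    return accuracy - half_width, accuracy + half_width


# Hypothetical validation-set size: 200 statements (not stated in this post).
low, high = accuracy_interval(0.93, 200)
print(f"{low:.3f} to {high:.3f}")  # prints 0.895 to 0.965
```

With those numbers the 95% interval is about ±3.5 percentage points, wide enough that teams scoring within a point or two of each other cannot be told apart.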

The strategies that deviated most from our solution were:

- Using the BM25 algorithm, a bag-of-words (algorithmic, not deep learning) ranking function, for matching and retrieval

- Using only smaller chunks, so that stronger LLMs could be used (longer prompts require more VRAM)

- Using a reasoning model for “truth validation”, but manually cutting it off if its reasoning was too slow and falling back to a simple BERT-type model in those cases
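For comparison with the embedding approach, here is a from-scratch sketch of Okapi BM25 scoring. It is an illustration, not any team's code: documents are assumed to be tokenized beforehand, k1 = 1.5 and b = 0.75 are common default values, and the IDF uses the non-negative log(1 + (N - n + 0.5) / (n + 0.5)) variant.

```python
import math
from collections import Counter


class BM25:
    """Okapi BM25 over pre-tokenized documents (bag-of-words; no embeddings)."""

    def __init__(self, docs: list[list[str]], k1: float = 1.5, b: float = 0.75):
        self.tfs = [Counter(d) for d in docs]  # term frequencies per document
        self.lengths = [len(d) for d in docs]
        self.avgdl = sum(self.lengths) / len(docs)
        self.k1, self.b = k1, b
        n_docs = len(docs)
        df = Counter(term for d in docs for term in set(d))  # document frequencies
        # Non-negative IDF: log(1 + (N - n + 0.5) / (n + 0.5)).
        self.idf = {t: math.log(1 + (n_docs - n + 0.5) / (n + 0.5)) for t, n in df.items()}

    def score(self, query: list[str], i: int) -> float:
        """BM25 score of document i for the query terms."""
        tf, dl = self.tfs[i], self.lengths[i]
        total = 0.0
        for term in query:
            f = tf.get(term, 0)
            if f:
                # Saturating term frequency, normalized by document length.
                denom = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
                total += self.idf[term] * f * (self.k1 + 1) / denom
        return total

    def top(self, query: list[str]) -> int:
        """Index of the highest-scoring document."""
        return max(range(len(self.tfs)), key=lambda i: self.score(query, i))
```

Because it only matches exact terms, BM25 rewards statements that reuse the reference document's vocabulary, which is plausible when the statements were generated from those very documents.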

Although most top teams scored ~93% on the validation leaderboard, ours was the only one that did not reach 91% on the final evaluation set; we got 89%. I am confident that using smaller chunks, and therefore a smarter LLM, would have brought us up to the 91% the other teams achieved.
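A back-of-the-envelope estimate shows why model size and prompt length compete for the same 24GB. Weights take roughly (parameters × bytes per parameter), and the KV cache grows linearly with the number of prompt tokens. The layer and head counts in the example are hypothetical placeholders rather than any specific model's config, and activation memory and framework overhead are ignored, so real usage is higher.

```python
def weights_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes); bf16/fp16 use 2 bytes per parameter."""
    return params_billion * bytes_per_param


def kv_cache_gb(tokens: int, layers: int, kv_heads: int, head_dim: int,
                bytes_per_value: float = 2.0) -> float:
    """Approximate KV-cache memory in GB: one key and one value vector per layer,
    per KV head, per token."""
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_value / 1e9


# Hypothetical 4B embedder + 8B LLM in bf16: the weights alone fill a 24GB card.
print(weights_gb(4) + weights_gb(8))  # 24.0
# Hypothetical config (36 layers, 8 KV heads, head_dim 128): KV cache for a 4,000-token prompt.
print(round(kv_cache_gb(4000, layers=36, kv_heads=8, head_dim=128), 2))  # 0.59
```

Under these assumptions, every token saved in the prompt is memory that can instead go to a larger model.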

I was not very involved in the other 2 challenges, although I did help with the cloud hosting.

Challenge 2 - Racecar

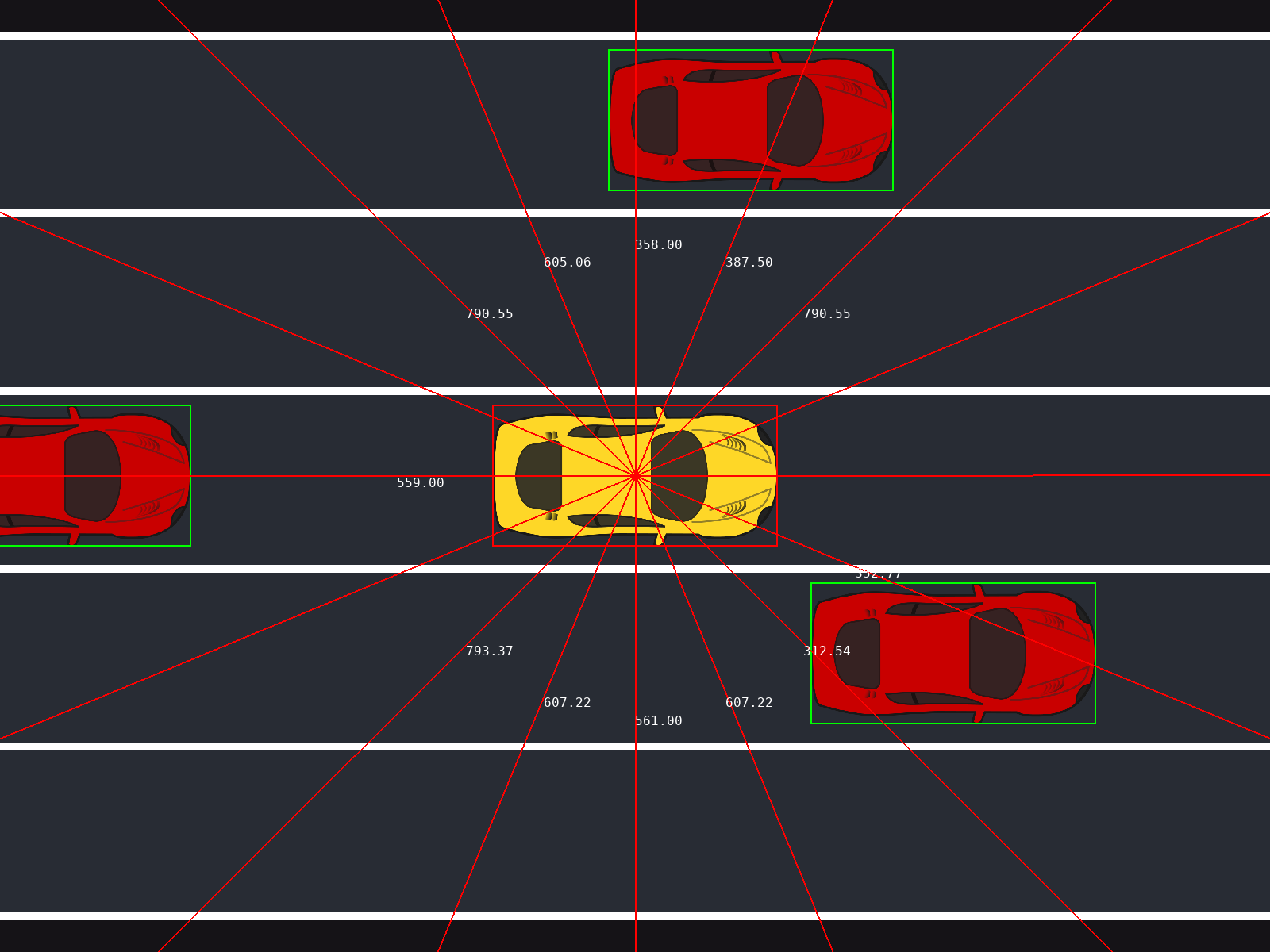

The racecar challenge was a pygame game/environment in which you had to drive a car along a five-lane highway. In each lane, a car can spawn with a speed relatively close to that of the player's car. All top teams quickly reached the same conclusion: reinforcement learning was not really viable, and it was better to hand-code an expert system. The main challenge was that the car could only perceive its surroundings through 16 sensors, each outputting the distance to the closest obstacle.

With or without machine learning, it is very hard to navigate the track consistently, because cars in the adjacent lanes are hard to perceive.
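To illustrate what a hand-coded expert system for this kind of environment looks like, here is a minimal rule-based controller. Everything about the interface is hypothetical: the sensor layout (16 sensors at 22.5° steps, index 0 pointing forward, increasing clockwise), the distance thresholds, and the action names. It also sidesteps the adjacent-lane perception problem described above rather than solving it.

```python
SAFE_FRONT = 300.0  # hypothetical distance units
SAFE_SIDE = 150.0

# Hypothetical layout: index 0 points straight ahead, indices increase clockwise,
# so 4 is right, 8 is rear and 12 is left.
FRONT = [15, 0, 1]
RIGHT = [2, 3, 4, 5, 6]
LEFT = [10, 11, 12, 13, 14]


def choose_action(readings: list[float]) -> str:
    """Pick an action from 16 distance readings (larger = more free space)."""
    if len(readings) != 16:
        raise ValueError("expected 16 sensor readings")
    # The closest obstacle in each sector decides whether that sector is safe.
    front = min(readings[i] for i in FRONT)
    if front > SAFE_FRONT:
        return "ACCELERATE"
    left = min(readings[i] for i in LEFT)
    right = min(readings[i] for i in RIGHT)
    if max(left, right) > SAFE_SIDE:
        return "STEER_LEFT" if left >= right else "STEER_RIGHT"
    return "DECELERATE"
```

Real controllers of this kind tend to grow many more rules (lane tracking, speed matching, memory of recently seen cars), but the structure stays the same: thresholds on sensor sectors mapped to actions.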

Challenge 3 - Tumor Segmentation

This task was actually recycled from a previous year, because of some trouble with the creators of the task we were supposed to have, which would have involved diseases and eye-scan image data.

The challenge was instead to segment tumors in PET scans. We knew that the winning team from that earlier year had used the public nnU-Net framework, which we conveniently used as well, and so did many others. Despite that, we still managed to score higher than the other teams. Luck could have been involved, but we did modify the default implementation a bit:

- We trained with specific image augmentations that matched the patterns seen in the validation dataset: salt-and-pepper noise, horizontal flips, and small perturbations in angle

- We created an ensemble of 5 models, each trained on a different fold of a 5-fold split.
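Both modifications can be sketched in NumPy, assuming 2D images. This is not our nnU-Net code (nnU-Net has its own augmentation pipeline, and how we plugged into it is not shown); the noise fraction, flip probability and rotation range are hypothetical values. The key detail is that geometric transforms are applied identically to the image and its label mask, while noise goes on the image only. Nearest-neighbour rotation keeps mask labels intact, and the ensemble averages each fold model's per-class probabilities before taking the argmax.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the example is reproducible


def salt_and_pepper(img: np.ndarray, amount: float = 0.01) -> np.ndarray:
    """Set about `amount` of the pixels to the image minimum (pepper) or maximum (salt)."""
    out = img.copy()
    u = rng.random(img.shape)
    out[u < amount / 2] = img.min()
    out[(u >= amount / 2) & (u < amount)] = img.max()
    return out


def rotate_nearest(arr: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate a 2D array about its centre with nearest-neighbour sampling; uncovered pixels become 0."""
    h, w = arr.shape
    t = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2, (w - 1) / 2
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, look up the source pixel it comes from.
    src_y = np.rint(np.cos(t) * (yy - cy) - np.sin(t) * (xx - cx) + cy).astype(int)
    src_x = np.rint(np.sin(t) * (yy - cy) + np.cos(t) * (xx - cx) + cx).astype(int)
    ok = (src_y >= 0) & (src_y < h) & (src_x >= 0) & (src_x < w)
    out = np.zeros_like(arr)
    out[ok] = arr[src_y[ok], src_x[ok]]
    return out


def augment(img: np.ndarray, mask: np.ndarray, max_angle: float = 10.0):
    """Flip and rotate image and mask together; add noise to the image only."""
    if rng.random() < 0.5:
        img, mask = img[:, ::-1], mask[:, ::-1]
    angle = rng.uniform(-max_angle, max_angle)
    return salt_and_pepper(rotate_nearest(img, angle)), rotate_nearest(mask, angle)


def ensemble_predict(fold_probs: list[np.ndarray]) -> np.ndarray:
    """Average (C, H, W) class probabilities over the fold models, then take the argmax."""
    return np.mean(np.stack(fold_probs), axis=0).argmax(axis=0)
```

Since each fold model saw a different training split, averaging their probabilities tends to smooth out individual models' mistakes.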

Final words

Just for fun, here is the final evaluation leaderboard: